Monthly Archives: January 2014

Complacency is not a 21st century skill

Preparing for spring term, I have been reading Remaking the American University (Zemsky, Wegner & Massy, 2002), Shackleton’s Way (Morrell & Capparell, 2003) and Tom Peters’ Re-Imagine! (2003). Today I took a break from assigned reading to catch up on blogs, wading through the “Happy New Year!” tweets to find some nuggets of insight and information in the realms of higher education and technology.

I had the time to read Michael Feldstein’s lengthy post about Pearson’s business strategy going into the new year, which focuses on learning assessment and outcomes measurement through “efficacy” evaluations (Can Pearson Solve the Rubric’s Cube?, Dec 31, 2013). Because I have a background in med ed, the term “efficacy” is not lost on me; it doesn’t feel foreign. I didn’t have one of the self-satisfied sarcastic reactions Michael thoughtfully included in his post (“Wait. You mean to tell me that, for all of those educational products you’ve been selling for all these years, your product teams are only now thinking about efficacy for the first time?”). I easily see past the term to recognize the opportunity it (or any similar term; pick your poison) symbolizes. I agree with Michael that the important thing here is the discourse the project encourages.

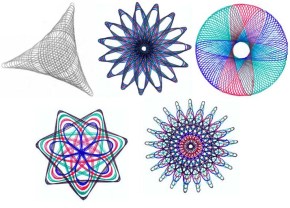

What Pearson is doing isn’t the same thing as what teachers are doing, but the model, I think, can be applied to programs, academic departments, and institutions. What came to mind as I read the post and thought about outcomes and efficacy evaluations was Spirograph – simple and complex, beautiful from a distance, generally flawed upon close inspection.

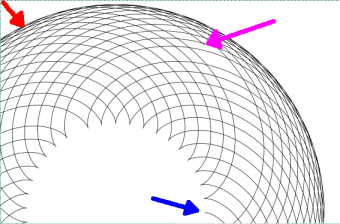

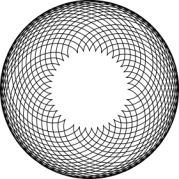

From a distance a Spirograph drawing is presented as a whole, but up close we see the individual strokes, the overlap, the intersections, the flaws and gaps. A teacher has that micro view – looking at each student’s progression, their pathway to learning – seeing the gaps and the flaws. She can retrace the efforts to find out what went wrong and work with the student to improve the outcomes. At the end of the course, she can present conclusive data, including artifacts, as evidence of each student’s level of achievement. There will be gaps or flaws. There should be gaps and flaws.

The imperfections of student learning and the imperfections in teaching are precisely why “efficacy” evaluations are important and valuable. No matter how many times we use the same tools and the same techniques, the outcomes vary (if you have ever done Spirograph, you know what I mean). Because the student group we have in the classroom and the world they live in are always changing, we absolutely cannot commit to one method, one unchanging set of outcomes, one expected level of achievement. The gaps and flaws are not a sign that we did it wrong – they are an opportunity for reflection on practice (what am I trying to do here?), reflection on experience (in what ways did un/intentionally changing the way I do things have an impact?) and an opportunity for change (if I used a different tool, app, or setting, or re-ordered the lessons, what would happen?). Efficacy evaluation gives us a chance to respond to changing needs. With a persistent evaluation plan in place, the variances associated with responsive course design will be not only understood but expected.

A program director or department chair has a macro view, looking at the bigger picture – overall, did we produce what we aimed to produce? Because the instructor has created lessons, activities and assessments that all map to the outcomes (right?), it is easy to zoom in and examine each stroke, each detail, to consider the gaps or flaws and understand how every anomaly relates to the norm. Educators know not every student will achieve the same level of mastery for every outcome. We want to see outliers.

We’re not looking for perfection in an efficacy evaluation – we are looking for patterns in failure and success, looking for consistencies as well as inconsistencies. Those “gaps” or “flaws” are evidence of change in the pattern, and further evaluation will determine if it’s an anomaly or a recurring pattern. We can take a closer look to determine the cause and effect (test performance fell/note taking declined/students were using tablets in class) and use that information to create an intervention (add a lesson on tablet note taking; encourage students to try collaborative note taking).

One of the problems I see with course-level learning outcomes is an expectation that students will achieve mastery on the first attempt, and there are often very few attempts at any given outcome. Evaluating hundreds of courses each year, I look at thousands of different course activities, assignments, projects and quizzes, and it is striking to see how frequently a single activity maps back to multiple outcomes while few outcomes map out to multiple activities. In other words, I see courses with dozens of outcomes but only a handful of linked activities. What I see is a push to apply the concept of outcomes in a course without a framework for students to make increasingly challenging attempts at mastery.

I like Pearson’s idea (as interpreted by Michael in his blog post and my interpretation of that interpretation). I like the idea of evaluating for efficacy. It forces us to consider: “This small piece makes sense, but in what ways does it make sense in the bigger picture?” If it doesn’t push the student to increasingly difficult levels of mastery, are we settling for mediocrity? A course assignment can be very well developed and provide a great learning opportunity, but how does it connect to other outcomes in that course, in other courses, and to student knowledge and ability overall? If it doesn’t have an effect on the bigger picture, does it matter how great it is? Is it even needed?

An institution or accrediting body will look at a variety of programs, each unique in color, shape, definition. What they are looking for, on a high level, is consistency, clarity, congruence with the mission. They can focus on a department or program and take a closer look, examining the definition, the quality. They can zoom in closer to examine each individual stroke, examine the flaws. An evaluator wouldn’t expect to see the same patterns across programs and departments – they all have unique missions – but there should be similar quality.

Because the teacher and the program director or department chair have done their jobs in defining the outcomes and gathering data and artifacts, zooming in to examine the details can be done at this level. Creating outcomes and associating them with learning activities and resources isn’t necessarily difficult. Weaving together all the elements in a purposeful and meaningful way across the curriculum is challenging. Applying ongoing evaluation is important, no matter how costly it is; otherwise, it is too easy to do it once, call it done, and walk away. The learners in our classrooms are changing, the tools they use are changing, and the methods applied by teachers change (however minutely), and all of this affects the outcomes. If frequent evaluation is not applied, we miss the chance to incorporate those changes effectively (ahem, efficacy) and monitor the degree of the effects (ahem, yeah).

What I have been reading in preparation for the upcoming semester connects to this, hence my spending New Year’s Day chewing on these ideas (and Chinese food) and mulling over Michael’s blog post.

In Remaking the American University there is a great chapter on quality and accountability in education (Making Education Quality Job One, Ch. 9). Zemsky, Wegner and Massy challenge us to define quality in education (not as easy as it sounds) and hold ourselves accountable for it with attention to the changes happening in society and in higher education. American colleges and universities have been in a near crisis for the past decade, struggling to respond to market changes without losing sight of their institutional missions. Schools that cling to what worked in the past are falling behind in what’s being asked for today. Schools that jumped into a quick-return investment may have lost some of their higher ed street cred (how many accelerated online MBA programs do we really need?). Schools that wait and see what their peers will do are missing out on a chance to make their name as an innovator. Change is hard – but a school that has a built-in, ready-to-go strategy to identify weaknesses and opportunities to rebuild its programs is better poised to respond to the changing market. Efficacy evaluations mean asking the tough questions and holding ourselves responsible for the outcomes of those evaluations. Evaluations mean little without subsequent action.

In colleges that fail, we have seen common problems – too many programs draining resources, declining enrollment, decreased revenue. In colleges that have come back from the brink of failure, there has usually been a complete overhaul and massive change – they cut programs, let faculty go, terminate degrees, and destroy traditions, but they also create new programs, bring in new faculty, create new degrees and begin new traditions. Change is not revered at most institutions; change is usually avoided. Evaluation programs are not appreciated because they are often misunderstood as criticism and can lead to change. It is understandable that institution-wide evaluation programs – especially ones that question efficacy – would not be welcomed. I think that fear or uncertainty is even more reason to do it. Complacency is not a 21st century skill.